With the rapid adoption of high-tech business solutions, AI services are spreading everywhere, and tools like ChatGPT and Claude have become everyday companions for many people. To work at their best, the models behind these tools need huge amounts of memory and computing power. If you want to make them smaller and faster without compromising their capabilities, you need model quantization.

This blog post explains what model quantization is, how it works, its main types, and how it is used in large language models (LLMs).

Large Language Models (LLMs)

Large language models are AI systems that understand and generate human-like language. They are trained on huge datasets and built with billions of parameters (tiny adjustable knobs that help the model “learn”). More parameters generally make a model more capable, but they also make it very large.

The Concept of Model Quantization

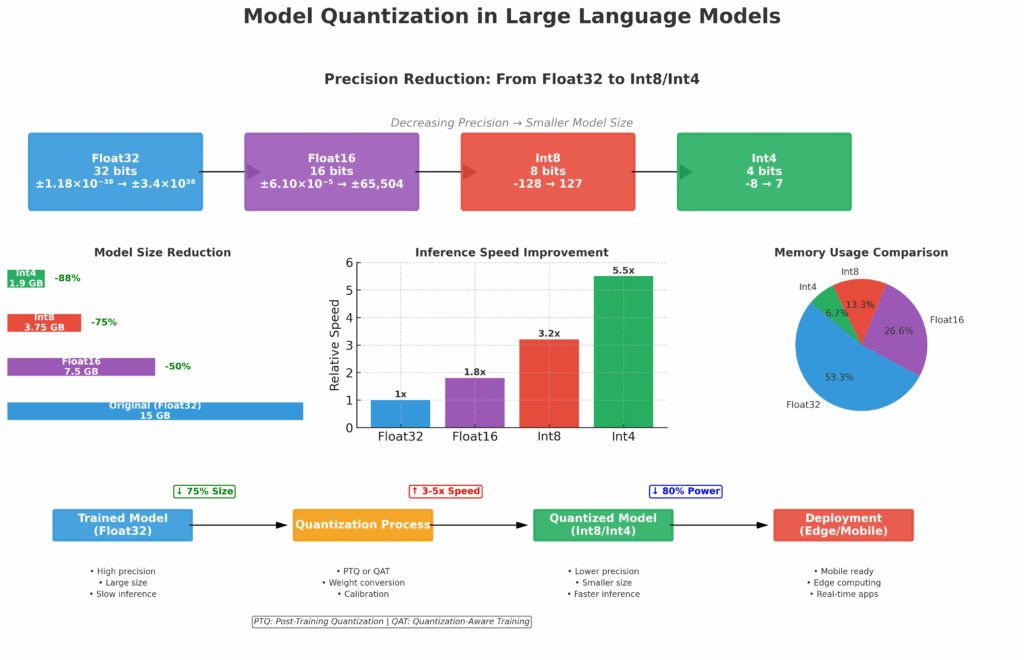

Model quantization makes AI models smaller and faster by reducing the precision of the numbers they use. LLMs are commonly trained and stored using numbers in the “float32” format, also called 32-bit floating-point representation, which means each number takes 32 bits to store. These numbers are very precise, but they take up more memory and are slower to process.

A floating-point number is stored in three parts:

- Sign (whether the number is positive or negative)

- Mantissa (the digits of the number)

- Exponent (how big or small the number is)
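You can see these fields in an actual float32 with Python’s standard `struct` module. Under the IEEE 754 standard, a float32 splits its 32 bits into 1 sign bit, 8 exponent bits (stored with a bias of 127), and 23 mantissa bits. The helper names `float32_parts` and `from_parts` below are ours, chosen for illustration.

```python
import struct

def float32_parts(x: float) -> tuple[int, int, int]:
    """Split a number, rounded to float32, into its IEEE 754 bit fields."""
    # Pack as a big-endian 32-bit float, then reread the same 4 bytes as an unsigned int
    (bits,) = struct.unpack(">I", struct.pack(">f", x))
    sign = bits >> 31               # 1 bit
    exponent = (bits >> 23) & 0xFF  # 8 bits, stored with a bias of 127
    mantissa = bits & 0x7FFFFF      # 23 bits of fraction digits
    return sign, exponent, mantissa

def from_parts(sign: int, exponent: int, mantissa: int) -> float:
    """Rebuild a normal (non-zero, finite) float32 value from its fields."""
    return (-1) ** sign * (1 + mantissa / 2**23) * 2.0 ** (exponent - 127)

s, e, m = float32_parts(1.5)
print(s, e, m)              # sign, biased exponent, mantissa bits
print(from_parts(s, e, m))  # back to 1.5
```

For 1.5 this prints `0 127 4194304` and then `1.5`. The exponent 127 means 2^0 once the bias is removed, and the mantissa bits encode the fractional 0.5.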

So when we talk about model quantization, we usually mean switching from float32 to formats like:

- float16 (half precision)

- int8 (integer with only 8 bits)

- int4 (even smaller)

The goal is to use fewer bits per number but still keep the model working correctly.
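The memory savings are easy to estimate: the weights need roughly (number of parameters × bits per number ÷ 8) bytes. The sketch below assumes a hypothetical 7-billion-parameter model. It counts only the weights and ignores extras such as quantization scale factors, activations, and runtime overhead.

```python
# Bits used to store one number in each format
BITS_PER_VALUE = {"float32": 32, "float16": 16, "int8": 8, "int4": 4}

def weight_memory_gb(num_params: int, fmt: str) -> float:
    """Approximate memory for the weights alone, in gigabytes (1 GB = 10**9 bytes)."""
    return num_params * BITS_PER_VALUE[fmt] / 8 / 1e9

num_params = 7_000_000_000  # hypothetical 7-billion-parameter model
for fmt in BITS_PER_VALUE:
    print(f"{fmt:>8}: {weight_memory_gb(num_params, fmt):.1f} GB")
```

At float32 the weights need about 28 GB. At int4 they need about 3.5 GB, an 8× reduction.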

In practice, quantization converts float32 numbers into 8-bit integers (int8) or even smaller 4-bit integers (int4). These formats help models run on portable devices such as laptops and phones, with higher speed and lower memory usage.
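A minimal sketch of one common way to do this conversion is symmetric “absmax” quantization. It picks a scale so that the largest weight maps to the largest integer, then divides every weight by that scale and rounds. The weights below are made up, and this is one simple scheme among several, not the only method used in practice.

```python
def quantize(weights: list[float], bits: int = 8) -> tuple[list[int], float]:
    """Symmetric absmax quantization: map floats onto signed integers."""
    qmax = 2 ** (bits - 1) - 1            # 127 for int8, 7 for int4
    largest = max(abs(w) for w in weights)
    scale = largest / qmax if largest > 0 else 1.0
    # Divide by the scale, round to the nearest integer, and clamp to the valid range
    q = [max(-qmax, min(qmax, round(w / scale))) for w in weights]
    return q, scale

def dequantize(q: list[int], scale: float) -> list[float]:
    """Map the integers back to approximate float values."""
    return [v * scale for v in q]

weights = [0.42, -1.30, 0.07, 0.95, -0.18]
q, scale = quantize(weights, bits=8)
print(q)                     # small integers, one per weight
print(dequantize(q, scale))  # close to, but not exactly, the originals
```

Here the largest weight, -1.30, maps to -127, and every restored value lies within half a scale step of the original. Passing `bits=4` gives integers from -7 to 7, a much coarser grid. This sketch uses the symmetric range -127 to 127 and leaves -128 unused so that positive and negative values are treated alike.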

A related idea is quantization in machine learning more broadly, which applies quantization across the whole process (training, data, and model) at every step.

Think of it like baking a cake: if you use lighter tools, lighter ingredients, and smaller measurements from the very start to get a lighter cake, that is quantization in machine learning.

If instead you bake the cake with all the proper ingredients in full measure, but then need to fit it into a small box, you might press it down, trim the edges, or leave off the frosting. That is what model quantization does.

Importance of Model Quantization

Quantization is especially important for large language models. It reduces model size, which makes models easier to store and share. It speeds up inference on devices. It uses less power, which suits battery-powered devices. It also enables edge computing, so an AI model can run on the device itself without internet access. In simple words, quantization helps AI models work smart without being heavy.

Types of Model Quantization

Model quantization can be applied in several ways. The most common types used with LLMs are:

1. Post-Training Quantization

True to its name, post-training quantization takes place after the model is trained. It is the fastest and simplest type: it takes a fully trained model and converts its weights, and sometimes its activations, to a lower-bit format. It is a bit like saving a finished photo as a smaller, compressed file: the picture looks the same at a glance, but some fine detail is lost.

Pros:

- No need to retrain the model

- Quick to apply

- Works well for many use cases

Cons:

- Sometimes the model loses accuracy, especially if you move from float32 to int8 directly
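A minimal post-training sketch for a single tiny linear layer shows the idea. It assumes symmetric int8 quantization with one scale for the weights and one scale for the activations. No retraining happens. The activation scale is simply measured, or “calibrated”, on a few sample inputs. The layer, its weights, the calibration data, and all function names are hypothetical.

```python
def absmax_scale(values, qmax=127):
    """Scale that maps the largest magnitude onto qmax."""
    largest = max(abs(v) for v in values)
    return largest / qmax if largest > 0 else 1.0

def to_int8(values, scale, qmax=127):
    return [max(-qmax, min(qmax, round(v / scale))) for v in values]

def post_training_quantize(weights, calibration_inputs):
    """Quantize a trained layer's weights and calibrate an activation scale."""
    w_scale = absmax_scale([w for row in weights for w in row])
    q_weights = [to_int8(row, w_scale) for row in weights]
    # The activation range is measured on sample inputs; the weights are not retrained
    x_scale = absmax_scale([x for sample in calibration_inputs for x in sample])
    return q_weights, w_scale, x_scale

def int8_linear(x, q_weights, w_scale, x_scale):
    """Integer multiply-accumulate, then rescale the result back to float."""
    q_x = to_int8(x, x_scale)  # inputs outside the calibrated range get clamped
    return [sum(qw * qx for qw, qx in zip(row, q_x)) * w_scale * x_scale
            for row in q_weights]

def float_linear(x, weights):
    return [sum(w * xi for w, xi in zip(row, x)) for row in weights]

weights = [[0.2, -0.5, 0.9], [-0.3, 0.1, 0.4]]      # "trained" weights
calibration = [[1.0, -0.5, 0.3], [0.8, 0.2, -1.0]]  # sample inputs
params = post_training_quantize(weights, calibration)
x = [0.5, -0.2, 0.7]
print(float_linear(x, weights))
print(int8_linear(x, *params))
```

The two outputs agree closely. If real inputs fall outside the range seen during calibration, they get clamped. That is one reason post-training quantization can lose accuracy.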

2. Quantization-Aware Training (QAT)

This is a more advanced method: instead of quantizing after training, you simulate quantization during training. This way the model learns to adjust itself so that it handles lower-precision numbers accurately.

Think of training someone to write with a pencil but no eraser: they adapt to the constraint during practice, so it does not trip them up later.

Pros:

- Higher accuracy after quantization

- Better for sensitive models like language models or image recognition in healthcare

Cons:

- Takes more time

- Needs more computing power
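A toy sketch of the core idea behind QAT uses a one-weight model, y = w·x. The forward pass uses a “fake-quantized” copy of the weight, while training updates a full-precision copy. Gradients pass through the rounding step using the straight-through estimator, a common trick that is our choice here. The data, the 0.05 quantization step, and the learning rate are all made up for illustration.

```python
def fake_quantize(w: float, scale: float = 0.05, qmax: int = 127) -> float:
    """Round w onto the integer grid and map it straight back to a float."""
    q = max(-qmax, min(qmax, round(w / scale)))
    return q * scale

def train_with_qat(data, lr=0.1, steps=200, scale=0.05):
    w = 0.0  # full-precision "shadow" weight, kept only during training
    for _ in range(steps):
        w_q = fake_quantize(w, scale)  # the forward pass sees the quantized weight
        # Gradient of the mean squared error with respect to w_q
        grad = sum(2 * (w_q * x - y) * x for x, y in data) / len(data)
        # Straight-through estimator: treat rounding as identity when
        # backpropagating, so the gradient updates the full-precision weight
        w -= lr * grad
    return fake_quantize(w, scale)  # only the quantized weight is deployed

data = [(x, 0.73 * x) for x in (-1.0, -0.5, 0.5, 1.0)]  # target slope: 0.73
w_deployed = train_with_qat(data)
print(w_deployed)
```

The target 0.73 is not on the 0.05 grid, so training settles on a neighboring grid point (0.70 or 0.75). The model learns the best weight it can actually represent, instead of being rounded after the fact.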

3. Activation-Aware Weight Quantization

This approach is very useful but a little more technical. During quantization, developers usually focus only on the model’s weights, but activations matter too. Activations are the values passed between layers while the model runs. Some weights are multiplied by much larger activations than others, so rounding errors in those weights hurt accuracy more.

In activation-aware weight quantization, developers look at how activations behave and how the weights interact with them. They then use that information to decide how to quantize the weights, protecting the ones that matter most. This keeps the model’s accuracy high even in low-bit formats like int4.

In simple words, instead of shrinking weights blindly, we look at the full picture and then decide how to quantize.
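Here is a simplified sketch of the activation-aware idea, loosely following the published AWQ approach. It measures each input channel’s average activation size on calibration data and scales up the weights of high-activation channels before 4-bit rounding. It then divides the scale back out and keeps whichever scaling strength `alpha` gives the smallest output error. To stay short, it uses one weight row, one quantization scale for the whole tensor, and made-up numbers. Real implementations quantize groups of weights and fold the inverse scale into the previous layer.

```python
def quantize_dequantize(values, bits=4):
    """Symmetric absmax round trip: float -> integer grid -> float."""
    qmax = 2 ** (bits - 1) - 1
    m = max(abs(v) for v in values)
    scale = m / qmax if m > 0 else 1.0
    return [max(-qmax, min(qmax, round(v / scale))) * scale for v in values]

def output_error(weights, w_hat, calib):
    """Sum of squared differences between full-precision and quantized outputs."""
    err = 0.0
    for x in calib:
        y = sum(w * xi for w, xi in zip(weights, x))
        y_hat = sum(w * xi for w, xi in zip(w_hat, x))
        err += (y - y_hat) ** 2
    return err

def awq_like_quantize(weights, calib, bits=4, alphas=(0.0, 0.25, 0.5, 0.75, 1.0)):
    n = len(weights)
    # Average activation magnitude per input channel over the calibration set
    act_mag = [sum(abs(x[j]) for x in calib) / len(calib) for j in range(n)]
    best = None
    for alpha in alphas:
        s = [max(a, 1e-8) ** alpha for a in act_mag]  # alpha = 0 means no scaling
        scaled = [w * sj for w, sj in zip(weights, s)]
        q = quantize_dequantize(scaled, bits)
        w_hat = [qj / sj for qj, sj in zip(q, s)]  # undo the per-channel scale
        err = output_error(weights, w_hat, calib)
        if best is None or err < best[0]:
            best = (err, alpha, w_hat)
    return best

weights = [0.9, 0.05, -0.6, 0.33]   # one output row of a layer
calib = [[1.0, 20.0, 1.0, 1.0],     # channel 1 carries large activations
         [-1.0, 18.0, 0.5, 1.0]]
err, alpha, w_hat = awq_like_quantize(weights, calib)
plain_err = output_error(weights, quantize_dequantize(weights), calib)
print(f"plain 4-bit error: {plain_err:.3f}, activation-aware: {err:.3f} (alpha={alpha})")
```

With plain rounding, the small weight 0.05 on the busy channel rounds to zero, and the output error is large. Scaling that channel first keeps it on the grid. Because `alpha = 0` is plain quantization, the search can never do worse than the unscaled baseline on the calibration data.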

Real-World Example of Model Quantization

Take a language model like ChatGPT, one of the most widely used AI tools today. Suppose you trained such a model on a huge dataset, so it gives excellent answers and users are happy with it, but the model file is huge, say 15GB. That size makes it impractical to run on a mobile phone.

By applying model quantization, you could shrink it from 15GB to around 2GB. The app then loads faster, the model replies more quickly, and users notice little change in the quality of its answers. This is why companies like Meta, Google, and OpenAI use quantization to make models mobile-friendly.

Model Quantization and Accuracy

A common question is: “Does model quantization affect accuracy?”

Yes, it does, but usually not by enough to affect your work.

When you make something less detailed, you are bound to lose some sharpness. But if you research carefully and choose the right method, such as quantization-aware training or activation-aware quantization, the loss of accuracy tends to be very small. In fast-moving real-world applications, the size and speed advantages are usually worth this cost.
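One way to see the trade-off is to measure how far weights drift after a quantize-and-restore round trip. The sketch below compares the mean squared error at 8 and 4 bits. It uses randomly generated, normally distributed weights rather than a real model; the distribution and its size are assumptions. Weight error is only a rough proxy. The real test is evaluating the quantized model on your own task.

```python
import random

def round_trip(weights, bits):
    """Quantize to a signed `bits`-bit integer grid and convert back to floats."""
    qmax = 2 ** (bits - 1) - 1
    scale = max(abs(w) for w in weights) / qmax
    return [max(-qmax, min(qmax, round(w / scale))) * scale for w in weights]

def mean_squared_error(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)

rng = random.Random(0)  # fixed seed so the run is repeatable
weights = [rng.gauss(0.0, 0.02) for _ in range(10_000)]  # made-up "layer"

errors = {bits: mean_squared_error(weights, round_trip(weights, bits)) for bits in (8, 4)}
print(errors)
```

The int4 error comes out far larger than the int8 error. The 4-bit grid has only 15 levels, compared with 255 for 8 bits, so each weight moves further when it is rounded.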

When Should You Use Quantization?

Consider LLM quantization when:

- Your model is too heavy for your hardware and you have no other way to shrink it

- You want to run large models on gadgets like phones or tablets

- You want faster response times

- You’re deploying models in limited-memory environments

- You need to save costs on cloud servers
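A quick back-of-the-envelope check can tell you whether quantization is needed on a given device. The sketch below picks the most precise format whose weights fit in memory. It keeps 80% of memory as the budget, leaving room for activations, the runtime, and other apps; that headroom figure, the device sizes, and the function name are hypothetical.

```python
# Bytes needed per weight in each format, from highest to lowest precision
BYTES_PER_WEIGHT = [("float32", 4.0), ("float16", 2.0), ("int8", 1.0), ("int4", 0.5)]

def pick_format(num_params: int, memory_gb: float, headroom: float = 0.8):
    """Return the most precise format whose weights fit in `headroom` of memory."""
    budget_bytes = memory_gb * 1e9 * headroom
    for fmt, nbytes in BYTES_PER_WEIGHT:
        if num_params * nbytes <= budget_bytes:
            return fmt
    return None  # does not fit even at 4 bits

print(pick_format(7_000_000_000, memory_gb=8))   # hypothetical 8 GB phone
print(pick_format(7_000_000_000, memory_gb=80))  # hypothetical 80 GB server GPU
```

For a 7-billion-parameter model, the 8 GB device needs int4, while the 80 GB GPU can keep float32. If the function returns `None`, even 4-bit quantization is not enough, and a smaller model is the better route.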

Quantization is Useful For Certain LLM Applications

Put simply, model quantization puts your AI model on a diet, making it smaller, lighter, and faster while keeping it smart. Quantization plays a key role in making large language models practical and productive in the real world. It helps your apps run smoothly, especially if you are working on applications like voice assistants, image recognizers, or chatbots.

As large language models keep growing, the need for quantization grows too. For developers balancing practicality and performance, model quantization is often the first choice. So if a model is too big to run, choose the right type of quantization to solve the problem. Need help implementing model quantization in your AI projects? Contact our AI experts today.